The usability of Bayesian networks

Statistics! No matter what you think about it, no matter if you like it or not, you cannot say that statistics isn’t useful! If you haven’t worked with stati...

Originally posted on 2015-04-22.

Imagine that you’re standing in your living room with your eyes closed. Now, you might have a mental map of the room and where the furniture are, but would you be able to move around the room without looking and without touching objects to feel where you are? How many steps would you have to take before you’re no longer sure exactly where you are? Not that many, I imagine.

When you navigate through your home, you constantly (but unconsciously) scan the environment and update your mental map of where you are. This helps you avoid bumping into objects, or walking into the wrong rooms. It’s no surprise that autonomous robots need to do the same scanning and mental-map-recalibration to successfully navigate in an environment. This is called ‘localization’.

In a university course I am currently taking and which deals with algorithms for mobile robots, localization is one of the covered techniques. Just like a human can use her perceived step length to estimate where she is even though her eyes are closed, a wheeled robot can estimate its position based on the wheels’ movement. But just like you would become more uncertain of your exact position the longer you walk with closed eyes, the robot’s estimated position will become less and less accurate unless it makes a scan of the environment and recalibrates itself. The estimated position might drift because the wheels slip or the movement calculations are inexact. Regardless of how the error arises, once there it will only grow larger. And a robot which relies on its position in order to navigate will likely start bumping into obstacles when it actually tries to dodge them. Unless it utilizes localization, that is.

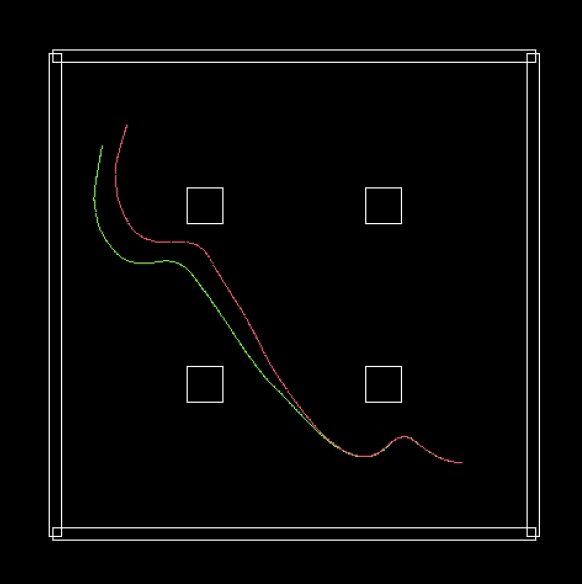

An illustration of the need for localization in mobile robot navigation. Here, the robot navigates without recalibrating its position in any way. As a result, the perceived trajectory (red) deviates quite significantly from the actual (green) trajectory. Figure adapted from “Introduction to Autonomous Robots” by professor Mattias Wahde. See the references below for more details.

In the course I mentioned above, the students get to implement navigation using localization in Matlab. We use the simple simulator ARSim (Autonomous Robot Simulator) to program a differentially-steered robot to navigate an arena using potential-field navigation. The robot uses a laser range finder (LRF) to determine the distances to nearby walls and obstacles, and also simulates what an LRF reading from its estimated position should be. By comparing the actual LRF readings to the simulated readings, the robot gets a sense of how large the error of the estimated position is. If the error is too large, then it is a good idea to run the localization process and recalibrate the estimated position.

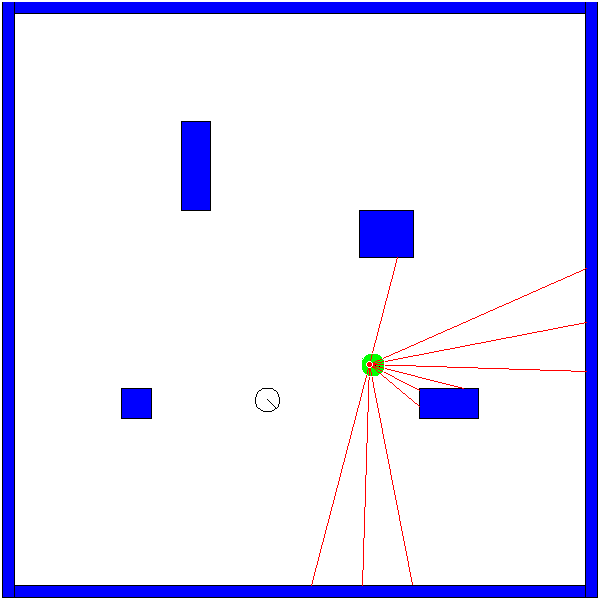

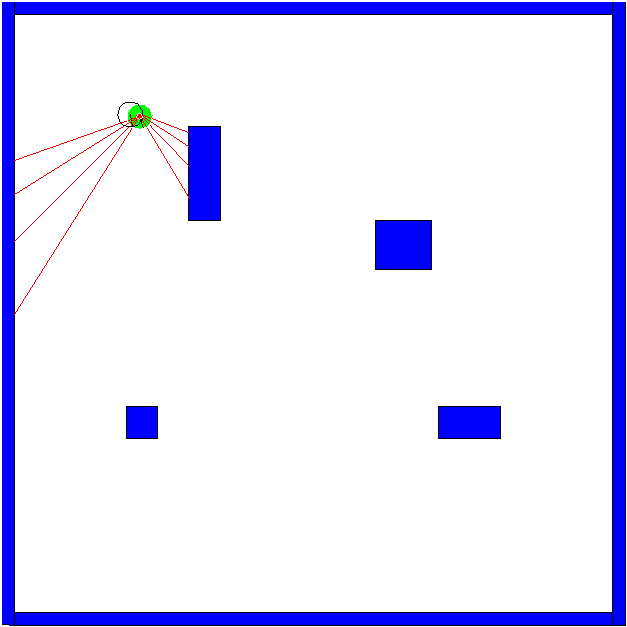

A snapshot from a simulation in ARSim. The robot (portrayed by the green circle) must navigate through the arena and avoid the obstacles (blue). Since no recalibration has been used, the estimated position (black circle) of the robot has drifted from the actual position, and while the robot believes it is far away from any obstacles, it is heading straight into one. The red lines represent the laser rays.

Now, in order to recalibrate the position, what we really want to do is find a robot pose (position + heading) in the mental map of the robot that minimizes the error. Since the error is defined as the difference between the actual LRF reading and the simulated LRF reading, the error should be minimized when the robot’s perceived pose is the same as the actual pose. For a robot that moves in two dimensions, this turns out to be a three-dimensional optimization problem (optimizing the x position, y position and heading). In this context, even a simple search algorithm works well. Assuming that our estimated pose already is pretty close to the actual pose (the error is not too large), we can just run a search in the vicinity of the estimated pose. In our simulation, we just pick a random pose close to the estimated one. If it turns out to be an improvement, it replaces the estimated pose, otherwise we discard it and try with a new random pose. Then we just have to continue this process until the error is below a threshold or a certain number of search iterations have been run. Surprisingly, even a naive search algorithm such as this works pretty good. In the absolutely worst case we have failed to find a better estimated pose, but most likely we have found a better one.

By checking the error of the estimated position every third second and (if needed) running the recalibration process, the estimated position stays close to the actual one and the robot can move through the arena without running into obstacles.

This method of doing localization assumes that we have a very accurate map of the environment that the robot can use to simulate LRF readings from various poses. This may be a severe drawback since the robot won’t be able to navigate through environments of which there aren’t any maps. On the other hand, if you encounter the problem of navigating without any sort of map you’re generally already on a more advanced level than what is covered in the course I mentioned earlier. If you come across this, you will likely have to look more into what is known as SLAM (Simultaneous Localization And Mapping). However, in many cases, for example a robot navigating through a hospital, it would be enough to just do the preprocessing of acquiring an accurate map (e.g. by manually laser scanning the area) before setting the robot to its task.

This method also relies on some parameters that must be set to reasonable values, for example an error threshold that determine whether the recalibration should be run or not. The robot could even run the recalibration continuously and take its movement into account, thereby removing the need for stopping, scanning, and checking an error threshold before recalibrating. The search algorithm, in which the robot finds a better estimated pose, can also definitely be improved as needed. There are lots of efficient search algorithms and optimization techniques for finding a good estimated pose in a three-dimensional space. All in all, this method for localization is rather robust, as well as easy to implement and extend.

(EDIT: while updating this post in early 2018, it seems the links below are unfortunately no longer valid)

Course webpage for Autonomous Agents: http://www.me.chalmers.se/~mwahde/courses/aa/2015/aa.html

Wahde, Mattias. “Introduction to autonomous robots.” Department of Applied Mechanics, Chalmers University of Technology, Goteborg, Sweden (2012). Link: http://www.me.chalmers.se/~mwahde/courses/aa/2012/Wahde_IntroductionToAutonomousRobots.pdf

Statistics! No matter what you think about it, no matter if you like it or not, you cannot say that statistics isn’t useful! If you haven’t worked with stati...

Imagine that you’re standing in your living room with your eyes closed. Now, you might have a mental map of the room and where the furniture are, but would y...

Yay! At last! The old ‘work-in-progress’ HTML site is gone from plankter.se, and instead this is here. Everything is not in place yet. I am still missing the...

Right, so I’m working on updating my website to a working condition again and am testing a framework called Jekyll.